In Python, multithreading is an execution model in which a single process runs several threads, each of which executes independently while sharing the process’s resources, such as its memory.

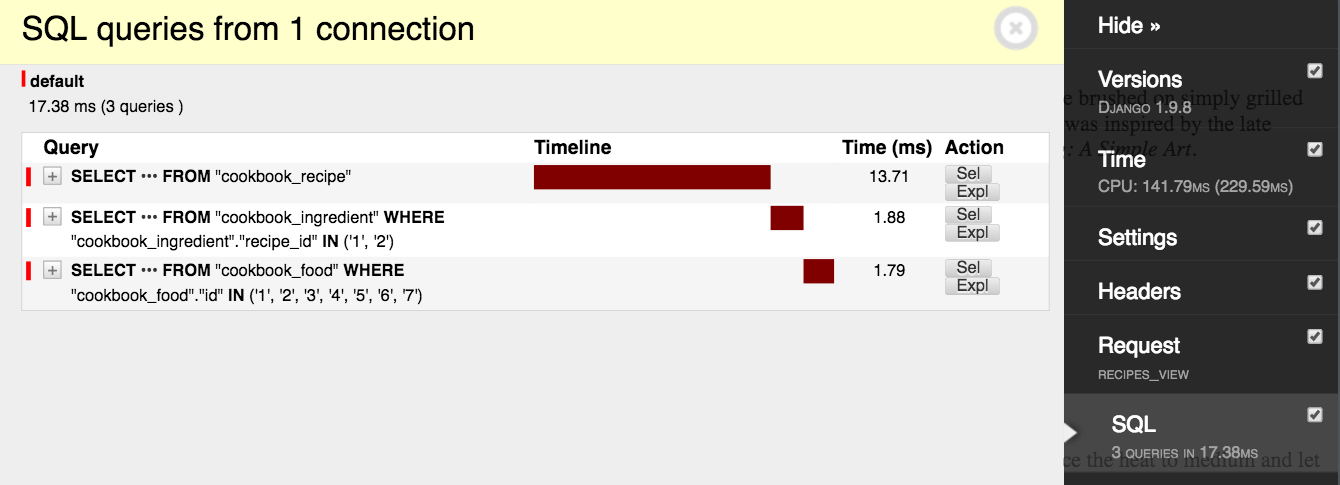
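To make the definition above concrete, here is a small illustrative sketch that is not part of the original project. Several threads started from one process write into the same list object, and each records the process ID it runs in. The names `record`, `shared_log` and `log_lock` are hypothetical.

```python
import os
import threading

# One list object, visible to every thread in this process.
shared_log = []
log_lock = threading.Lock()  # guards the shared list while threads write to it

def record(label):
    # Each thread runs this function independently...
    entry = (label, threading.current_thread().name, os.getpid())
    # ...but all of them write into the same in-memory list.
    with log_lock:
        shared_log.append(entry)

threads = [threading.Thread(target=record, args=(f"task-{n}",)) for n in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait until every thread has written its entry

# Every entry reports the same process ID: the threads live inside one process.
for label, thread_name, pid in sorted(shared_log):
    print(label, thread_name, pid)
```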

I recently ran into timeouts with a long-running web process that I needed to speed up significantly. The process had to fetch data from a large number of URLs, and that was the source of the delay. The number of URLs varied from user to user, and each URL took a long time to respond (about 1.5 seconds).

Fetching 10-15 URLs one after another could take more than 20 seconds, and my server’s HTTP connection timed out. Instead of increasing the timeout, I switched to Python’s threading module. It is simple to learn, quick to put into practice, and it solved my problem immediately. The system was built with Flask, a Python web microframework.

Python Multi-Threading For A Small Number Of Tasks

Threading in Python is straightforward. The standard library module is called “threading”. You create “Thread” objects, give each one a target function to run, and start them, after which they all work at the same time. You can start hundreds of threads this way. Strictly speaking, in CPython the global interpreter lock (GIL) allows only one thread to execute Python bytecode at a time. However, a thread releases the GIL while it waits on network I/O, so I/O-bound work such as fetching URLs overlaps very well. The first method, inspired by several StackOverflow discussions, starts a new thread for each URL request. It was not the best option, but it was a good learning experience.
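The snippet below is a minimal sketch of the Thread/target/args pattern, not code from the original system. It uses `time.sleep` as a stand-in for a slow network request, so it runs without network access. The function name `slow_request` and the 0.2-second delay are illustrative assumptions. Because a waiting thread releases the GIL in CPython, the five waits overlap instead of adding up.

```python
import threading
import time

def slow_request(delay):
    # Stand-in for a network call: time.sleep releases the GIL, much like waiting on a socket.
    time.sleep(delay)

start = time.perf_counter()
threads = []
for _ in range(5):
    # target is the function each thread runs; args is the tuple of arguments passed to it.
    t = threading.Thread(target=slow_request, args=(0.2,))
    t.start()
    threads.append(t)
for t in threads:
    t.join()  # wait for every thread to finish before reading the clock
elapsed = time.perf_counter() - start

# Run one after another, five 0.2 s waits would take about 1.0 s; run in threads they overlap.
print(f"elapsed: {elapsed:.2f} s")
```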

First, define a “work” function that each thread will execute. In this example the work function is a “crawl” function that retrieves data from a URL. A Thread object does not hand its target function’s return value back to the caller. Instead, we pass in a “results” list that all threads share, along with the index in that list where each thread should store its result. The crawl() function looks like this:

    ...
    import logging
    from urllib.request import urlopen  # urllib2 in Python 2
    from threading import Thread
    ...

    # Define a crawl function that retrieves data from a url and places the result in results[index]
    # The 'results' list will hold our retrieved data
    # The 'urls' list contains all of the urls that are to be checked for data
    results = [{} for x in urls]

    def crawl(url, result, index):
        # Keep everything in a try/except block so we handle errors
        try:
            data = urlopen(url).read()
            logging.info("Requested..." + url)
            result[index] = data
        except Exception:
            logging.error('Error with URL check!')
            result[index] = {}
        return True

In Python, we use the “threading” module to build “Thread” objects that start threads. For each thread we define a target function (‘target’) and a sequence of arguments (‘args’). Once started, the threads all execute the function concurrently. Because each URL gets its own thread, the total lookup time drops to roughly that of the slowest single request (about 1.5 seconds here) instead of growing with the number of URLs. The threads are started with the following code:

    # Create a list of threads
    threads = []
    # In this case 'urls' is a list of urls to be crawled.
    for ii in range(len(urls)):
        # We start one thread per url present.
        process = Thread(target=crawl, args=[urls[ii], results, ii])
        process.start()
        threads.append(process)

    # We now pause execution on the main thread by 'joining' all of our started threads.
    # This ensures that each has finished processing the urls.
    for process in threads:
        process.join()

    # At this point, results for each URL are now neatly stored in order in 'results'

The join() method is the only new element here. Calling join() blocks the calling thread (in this case, the program’s main thread) until the thread it was called on has finished. Joining every thread stops the program from moving on until all URLs have been fetched.

This one-thread-per-task strategy works well unless you have a large number of tasks (hundreds or more).
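The one-thread-per-URL pattern can be tried without a network. In this sketch, `fake_fetch` and the `example.com` URLs are hypothetical stand-ins for `urlopen(url).read()`. Later URLs are given shorter delays so that they finish first. This shows that storing each result at its own index keeps `results` in the same order as `urls`, and that `join()` holds the main thread until every worker is done.

```python
import threading
import time

def fake_fetch(url, delay):
    # Hypothetical stand-in for urlopen(url).read(): wait, then return some data.
    time.sleep(delay)
    return f"data from {url}"

def crawl(url, delay, results, index):
    # Each thread writes only to its own slot, so no two threads touch the same index.
    results[index] = fake_fetch(url, delay)

def fetch_all(urls):
    results = [None] * len(urls)
    threads = []
    for index, url in enumerate(urls):
        # Later URLs get shorter delays, so they finish before earlier ones.
        delay = 0.05 * (len(urls) - index)
        t = threading.Thread(target=crawl, args=(url, delay, results, index))
        t.start()
        threads.append(t)
    for t in threads:
        t.join()  # block the main thread until this worker has finished
    return results

urls = ["http://example.com/a", "http://example.com/b", "http://example.com/c"]
print(fetch_all(urls))
# ['data from http://example.com/a', 'data from http://example.com/b', 'data from http://example.com/c']
```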

Python Multi-Threading Using Queue For A Large Number Of Tasks

This method worked well for us. Users of our web application needed 9-11 threads per request on average, and the threads started, ran, and returned results correctly. Later, when some customers needed far more concurrent requests (more than 400), problems appeared. Python tried to start hundreds of threads for such requests, which led to errors such as:

    error: can't start new thread
    File "/usr/lib/python2.5/threading.py", line 440, in start
    _start_new_thread(self.__bootstrap, ())

Introducing Queue for Thread Management

The initial solution was not suitable for these users, because there is a limit to how many threads a process can start. Each thread needs its own stack, so memory and operating-system limits cap the number of threads a process can create. The standard library’s queue module (named Queue in Python 2) helps get around the problem. A queue is simply a collection of “tasks to be completed”. Threads take tasks from the queue when they are free, complete them, and come back for more. In this case, we needed to make sure that no more than 50 threads were active at any given time, while still being able to process any number of URL requests. Setting up a queue in Python is easy:

    # Setting up the Queue
    ...
    from queue import Queue  # the module is named Queue in Python 2
    ...
    # Set up the queue to hold all the urls (maxsize=0 means no size limit)
    q = Queue(maxsize=0)
    # Use many threads (50 max, or one for each url)
    num_theads = min(50, len(urls))

Populating the Queue with Tasks

To get results back from the worker threads, we use the same approach as before: a shared results list plus an index that says where each result belongs. Because we no longer call crawl() directly with per-URL arguments, the index must travel with each job in the queue. There is also no guarantee about the order in which tasks are executed.

    # Populating Queue with tasks
    results = [{} for x in urls]
    # Load up the queue with the urls to fetch and the index for each job (as a tuple):
    for i in range(len(urls)):
        # Need the index and the url in each queue item.
        q.put((i, urls[i]))

Because it now pulls its work from the queue, the threaded “crawl” function changes. Each thread keeps taking jobs until the task queue is empty, then exits and returns.

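    # Threaded function for queue processing.
    from queue import Empty  # the module is named Queue in Python 2

    def crawl(q, result):
        while True:
            # Fetch new work from the Queue. get_nowait() raises Empty instead of
            # blocking, so a thread cannot hang if another thread grabs the last job
            # between an empty() check and a get() call.
            try:
                work = q.get_nowait()
            except Empty:
                break  # queue drained: this thread is done
            try:
                data = urlopen(work[1]).read()
                logging.info("Requested..." + work[1])
                result[work[0]] = data  # Store data back at correct index
            except Exception:
                logging.error('Error with URL check!')
                result[work[0]] = {}
            # Signal to the queue that task has been processed
            q.task_done()
        return True

Starting Worker Threads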

The threads are given the new Queue object as well as the list for storing results. Each job in the queue carries the final position of its result, so the final “results” list is in the same order as the original “urls” list. The worker threads are started like this:

    # Starting worker threads on queue processing
    for i in range(num_theads):
        logging.debug('Starting thread %d', i)
        # Setting threads as "daemon" allows the main program to
        # exit eventually even if these don't finish correctly.
        worker = Thread(target=crawl, args=(q, results), daemon=True)
        worker.start()

    # Now we wait until the queue has been processed
    q.join()
    logging.info('All tasks completed.')

Managing Performance

Our jobs are no longer all processed in parallel. Instead, they are shared among at most 50 threads. As a result, 100 URLs take roughly 2 × 1.5 = 3 seconds, since each thread handles about two requests in turn. This was acceptable here because few users need more than 50 concurrent requests. At the very least, the system is now flexible enough to handle any number of URLs.
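The arithmetic above can be checked with a self-contained sketch of the queue-based approach. This is a simplified variant under stated assumptions, not the production code: `fake_fetch`, the `example.com` URLs and the 0.05-second delay are hypothetical stand-ins for real requests. A lock-protected counter records how many requests are in flight at once. With 100 tasks and a 50-thread cap, the run needs at least two rounds of the delay, and peak concurrency never exceeds 50.

```python
import threading
import time
from queue import Queue, Empty

DELAY = 0.05      # hypothetical per-request latency (stand-in for ~1.5 s)
MAX_THREADS = 50  # cap on worker threads

in_flight = 0
peak = 0
counter_lock = threading.Lock()

def fake_fetch(url):
    # Hypothetical stand-in for urlopen(url).read() that also tracks concurrency.
    global in_flight, peak
    with counter_lock:
        in_flight += 1
        peak = max(peak, in_flight)
    time.sleep(DELAY)
    with counter_lock:
        in_flight -= 1
    return f"data from {url}"

def worker(q, results):
    while True:
        try:
            index, url = q.get_nowait()
        except Empty:
            return  # no work left: let the thread exit
        try:
            results[index] = fake_fetch(url)
        finally:
            q.task_done()  # always mark the job done so q.join() can return

def fetch_all(urls):
    q = Queue()
    for job in enumerate(urls):
        q.put(job)  # each job is an (index, url) tuple
    results = [None] * len(urls)
    for _ in range(min(MAX_THREADS, len(urls))):
        threading.Thread(target=worker, args=(q, results), daemon=True).start()
    q.join()  # returns once task_done() has been called for every job
    return results

urls = [f"http://example.com/{n}" for n in range(100)]
start = time.perf_counter()
results = fetch_all(urls)
elapsed = time.perf_counter() - start
print(f"{len(results)} results in {elapsed:.2f} s, peak concurrency {peak}")
```

The sketch uses `get_nowait()` and `queue.Empty` rather than checking `q.empty()` before a blocking `q.get()`. This avoids a worker blocking forever when another thread takes the last job between the check and the call. That matters in a long-running web server, where such stuck threads would accumulate.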

Conclusion

In this article, we looked at how to use Python’s threading module to add multithreading to Python scripts. As always, if you found this article useful, don’t forget to share it, and leave a comment if you have any questions.

Happy Coding…!!!
